GAS TURBINE & RECIPROCATING ENGINE

DECISIONS UPDATE

March 2018

Table of Contents

Deep Knowledge within Analytics is key to successful IIoT implementation in a Power Plant

Optimization of Emissions Treatment Chemicals with Seeq Corp. Data Analytics

Data Analytics is a Major Subject in the Monthly IIoT Webinars

In the next two articles Emerson

and Seeq address the need for

subject matter expertise to make data analytics valuable. Emerson talks about

“deep equipment knowledge.” Seeq

talks about supplying the “brawn” but needing the client “brain” input.

Relative to deep equipment knowledge, there is general agreement that the

industry is losing its most experienced experts at an alarming rate. Relative to

the client “brain”, the industry is characterized by silos in part caused by

an avalanche of new information which is impossible to assimilate. Data

analytics will rapidly increase the size of the avalanche.

The McIlvaine Company was formed 44 years ago on the premise that knowledge

systems would provide a valuable tool to capture the wisdom of the experts and

ensure that their contribution would not end with their retirement. Over the

years the number of available knowledge systems has expanded. The term

“decisions” has replaced “knowledge” to indicate the action potential.

All the data analytics pyramids are topped with “wisdom”. The McIlvaine Decision

Systems are an integral part of the broader Industrial Internet of Wisdom

(IIoW). These systems can be used by the new hires to help them become subject

matter experts (SMEs). The top-level SMEs can lead and leverage the Decision

Systems to become subject matter ultra-experts (SMUEs). Those suppliers of data

analytics software can use the Decision Systems to help their clients make

better choices rather than just rely entirely on the client input. More details

are found at

Navigating the Sea Change in the Combust, Flow and Treat Markets.

Deep Knowledge within Analytics is key to successful IIoT implementation in a Power Plant

IoT and automation help plants remain competitive by improving their

performance in many areas including: reduced outages, extended life of

equipment, reduced maintenance costs, improved heat-rate, reduced emissions,

reduced HS&E incidents, improved response time and enhanced productivity.

This is achieved through digital transformation of how the plant is run and

maintained, using outcome-focused service strategies based on external connected

services for equipment condition monitoring and maintenance management, which in

turn is based on automatic data collection and analysis, and ultimately on a

digital ecosystem.

Predictive analytics software helps in the interpretation of data streaming in

from equipment sensors to diagnose signs of trouble early. This analytics

software must be easy for reliability engineers and maintenance technicians to

use. Simple apps should warn them if there is a potential problem with pumps,

gearboxes, cooling towers, compressors, blowers, heat exchangers, fans and

air-cooled heat exchangers with plain-text, actionable information. To get this

level of ease-of-use, plants use specialized equipment analytics apps rather

than generic data analytics tools. At the highest level, the analytics app

displays equipment health as a simple dashboard, allowing the user to drill down

into details.

Deep equipment knowledge is contained within the analytics to predict specific

equipment problems. The apps perform real-time analytics on multiple variables

such as vibration, temperature and pressure as the data is coming in from the

sensors.

Analytics pick up developing trends, instability and changes in background noise

in real-time in the early stages, thus predicting failure and fouling. This

gives maintenance a chance to schedule service in advance, and if the problem is

caused by operations, they can make changes to prevent it from getting worse.
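The idea of picking up a developing trend before it becomes a failure can be illustrated with a minimal sketch. It assumes a simple rule that is not taken from Emerson's software: a short rolling mean is compared against a baseline of known-good readings, and an alert is raised when it moves more than a chosen number of baseline standard deviations away. The function name, window sizes, limit and vibration values are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev

def detect_drift(readings, baseline_size=20, window=5, z_limit=3.0):
    """Return the index of the first reading at which the mean of the last
    `window` samples deviates from the baseline mean by more than `z_limit`
    baseline standard deviations, or None if no drift is found."""
    baseline = readings[:baseline_size]
    mu = mean(baseline)
    sigma = pstdev(baseline) or 1e-9  # guard against a perfectly flat baseline
    recent = deque(maxlen=window)
    for i, value in enumerate(readings[baseline_size:], start=baseline_size):
        recent.append(value)
        if len(recent) == window and abs(mean(recent) - mu) / sigma > z_limit:
            return i
    return None

# Hypothetical vibration readings (mm/s): steady operation, then a slow rise.
steady = [2.0, 2.1, 1.9, 2.0, 2.1] * 4
rising = [2.0 + 0.1 * k for k in range(1, 16)]
alarm_index = detect_drift(steady + rising)
```

A purpose-built equipment app would combine several variables and equipment-specific rules; the point here is only that the alert fires while the rise is still small, which is what gives maintenance time to schedule service.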

In the case of a pump, issues like developing strainer blockage, bearing

failure, mechanical seal failure and pre-cavitation are presented as

easy-to-understand plain text. Engineers need not have data analytics skills to

interpret the data because the diagnostics are descriptive and actionable.

'Pre-cavitation' tells personnel there probably is a blockage, so they will

check for closed upstream and downstream valves or a plugged strainer.

The full text of this analysis by Jonas Berge, Director of Applied Technology at

Emerson Automation Solutions, is found at

http://www.powerengineeringint.com/articles/print/volume-25/issue-8/features/fleet-management-and-the-iiot.html

Optimization of Emissions Treatment Chemicals with Seeq Corp. Data Analytics

A large coal-fired power generation facility located in the Western U.S. faced a

difficult task. Like most such plants, the facility was required to control its

emissions, such as sulfur in the form of SOx, nitrogen in the form of

NOx, mercury (Hg), and various carbon compounds. A facility of this

type typically reduces emissions through a combination of capital investments

(such as a scrubber, selective catalytic reduction [SCR] system, and fabric

filter) and ongoing material costs (such as lime, NH3, specialty

scavengers, and oxidizers)—as well as catalyst beds, filters, and sludge

disposal.

Units often overtreat flue gas in order to ensure compliance, but this

increases mitigant costs, and can result in reliability/longevity issues,

contributing to high maintenance costs or even downtime. For example, some Hg

mitigants are corrosive, and NH3 degrades the SCR catalyst, resulting

in higher maintenance costs.

In this particular case, the plant was working in collaboration with its

chemical vendor to control Hg using a new additive, calcium bromide, in place of

activated carbon injection in the exhaust stream. The liquid calcium bromide was

added to the raw coal before processing. Before the application of data

analytics, the approach was to overfeed chemicals to ensure compliance, then to

make conservative manual reductions to the application rate over a period of

months and observe how emissions results were affected.

The plant received about five 400-gallon totes of calcium bromide per month, a

total of about 20,000 pounds, at a cost of about $37,000 total. The additive was

applied to the raw coal via a small pump mounted on a temporary skid.

The plant had a conservative internal average target value of 1.0 μg/m3 Hg

in the emissions. When the plant was at full capacity, which was the preference,

Hg hovered at or just above 1.0. When the plant was operating at medium and low

output, the Hg emissions dipped down as low as 0.1.

Ideally, additive use would be adjusted at lower loads to the exact amount

required, cutting the amount spent on the additive, and reducing the negative

effect on mechanical integrity resulting from over-application of additive. This

was not possible with the then-current system, because the additive feed rate

was continuously adjusted per a linear relationship with coal feed rate, even

though the effect of the additive on Hg levels in the stack was non-linear.

There was also a lag time between the addition of the additive and the effect on

Hg levels, further complicating feed rate control. The problem wasn’t a big

issue at the time, but was likely to become more troublesome in the future

because the plant anticipated medium- and low-output conditions would occur more

frequently.
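The article does not say how the lag between additive feed and stack Hg was measured. One common way to estimate such a delay, shown here purely as an assumption, is to shift one signal against the other and keep the shift with the strongest correlation. The function name and all data values below are hypothetical.

```python
from statistics import mean

def estimate_lag(cause, effect, max_lag):
    """Return the shift (in samples) of `effect` behind `cause` that gives
    the strongest absolute Pearson correlation, searching 0..max_lag."""
    def corr(xs, ys):
        mx, my = mean(xs), mean(ys)
        num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        den = (sum((x - mx) ** 2 for x in xs)
               * sum((y - my) ** 2 for y in ys)) ** 0.5
        return num / den if den else 0.0

    best_lag, best_score = 0, 0.0
    for lag in range(max_lag + 1):
        xs = cause[:len(cause) - lag]   # earlier additive feed values
        ys = effect[lag:]               # later Hg readings
        score = abs(corr(xs, ys))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Hypothetical additive feed rate and a stack Hg signal that responds
# three samples later (more additive, less Hg).
feed = [10, 12, 15, 11, 9, 14, 13, 10, 8, 12, 16, 11, 10, 13, 9, 12]
TRUE_LAG = 3
hg = [1.0] * TRUE_LAG + [1.5 - 0.05 * f for f in feed[:-TRUE_LAG]]
estimated = estimate_lag(feed, hg, max_lag=6)
```

Real plant data would be noisy and the response non-linear, so the correlation peak would be broader than in this clean example.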

If the plant could save just one tote of chemical per month, it would equate to

about $7,500 per month, or approximately $90,000 per year, in savings.

Furthermore, the plant expected to conduct further optimization of the

additive-emissions curve relationship for even finer tuning at medium- and

low-load operations.
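The savings figure follows directly from the delivery numbers quoted earlier (about five totes and about $37,000 per month). The short calculation below reproduces it; the variable names are illustrative, and the article rounds the results to about $7,500 per month and $90,000 per year.

```python
# Figures quoted in the article (all approximate).
totes_per_month = 5
monthly_cost_usd = 37_000
monthly_weight_lb = 20_000

cost_per_tote = monthly_cost_usd / totes_per_month      # 7400.0
cost_per_pound = monthly_cost_usd / monthly_weight_lb   # about 1.85
annual_saving_one_tote = cost_per_tote * 12             # 88800.0

print(f"Cost per tote:  ${cost_per_tote:,.0f}")
print(f"Cost per pound: ${cost_per_pound:.2f}")
print(f"Annual saving from one tote per month: ${annual_saving_one_tote:,.0f}")
```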

Some key questions required answering in order to optimize additive feed rate.

The questions were:

Does the pump feed rate go to zero when the curve (coal rate versus additive feed

rate) goes below zero for chemical rate, or is a minimum rate maintained?

Should the pump be calibrated with a non-linear curve, or with separate

linear curves for different regions of operations?

Was there a better way to optimize feed rate?
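The first two questions can be made concrete with a small sketch. It contrasts a single linear curve, clamped at a minimum rate so that it never goes negative, with a set of linear segments joined at breakpoints. The slopes, intercepts and breakpoints are invented for illustration and are not the plant's calibration.

```python
def linear_feed(coal_rate, slope, intercept, min_rate=0.0):
    """Additive feed from one linear curve, never below `min_rate`."""
    return max(min_rate, slope * coal_rate + intercept)

def piecewise_feed(coal_rate, breakpoints):
    """Additive feed from linear segments between (coal_rate, feed) points.
    Outside the covered range the nearest end value is held."""
    pts = sorted(breakpoints)
    if coal_rate <= pts[0][0]:
        return pts[0][1]
    if coal_rate >= pts[-1][0]:
        return pts[-1][1]
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= coal_rate <= x1:
            return y0 + (y1 - y0) * (coal_rate - x0) / (x1 - x0)

# Hypothetical numbers: a single line that would go negative at low coal feed.
at_full_load = linear_feed(100, slope=0.5, intercept=-10)             # 40.0
at_low_load = linear_feed(10, slope=0.5, intercept=-10)               # clamped to 0.0
with_floor = linear_feed(10, slope=0.5, intercept=-10, min_rate=2.0)  # 2.0

# Hypothetical segments: gentle slope at low load, steeper near full load.
segments = [(0, 0.0), (50, 5.0), (100, 40.0)]
mid_load = piecewise_feed(75, segments)                               # 22.5
```

Separate linear segments are one simple way to approximate a non-linear additive-to-Hg relationship while keeping the pump calibration easy to inspect.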

To answer the questions, data analytic steps were taken in a collaborative

effort between plant and Seeq personnel. Seeq data analytics software was

applied to data residing in the plant’s Ovation control system, just one of the

many data sources for which Seeq provides direct connection.

The first step was to build a chemical addition pump-setting signal in gallons

per hour, based on a linear relationship with the coal feed. This showed how

well the pump settings performed based on what was learned in the data analysis,

with the aim being optimization of the pump-curve relationship. Different

conditions were created for the various chemical addition test periods as the

pump settings were tweaked, and the results were spliced together over time,

using the Splice function in the software.

Next, the power generation signal was queued up and the Value Search tool was

applied to identify high-, medium-, and low-load conditions. Also using Value

Search, conditions were identified when Hg was less than the 1.0 target.
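The kind of threshold search described here can be sketched in plain Python. This is not Seeq's API; it is a generic illustration that scans time-stamped samples and returns the periods in which a test on the value holds. The load thresholds and all sample values are hypothetical; only the 1.0 Hg target comes from the article.

```python
def find_intervals(samples, predicate):
    """Given (timestamp, value) samples in time order, return a list of
    (start, end) timestamps for each consecutive run where predicate(value)
    is true. `end` is the timestamp of the last sample in the run."""
    intervals = []
    start = prev = None
    for t, v in samples:
        if predicate(v):
            if start is None:
                start = t
            prev = t
        elif start is not None:
            intervals.append((start, prev))
            start = None
    if start is not None:
        intervals.append((start, prev))
    return intervals

# Hypothetical hourly samples: (hour, plant output in MW) and (hour, stack Hg).
load = [(0, 500), (1, 480), (2, 300), (3, 280), (4, 150), (5, 160), (6, 450), (7, 490)]
hg = [(0, 1.0), (1, 1.1), (2, 0.6), (3, 0.5), (4, 0.1), (5, 0.2), (6, 0.9), (7, 1.0)]

high_load = find_intervals(load, lambda mw: mw >= 400)
medium_load = find_intervals(load, lambda mw: 200 <= mw < 400)
low_load = find_intervals(load, lambda mw: mw < 200)
hg_below_target = find_intervals(hg, lambda x: x < 1.0)
```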

The team then created composite conditions identifying when the chemical

addition could be more tightly controlled. In this case, that was when the plant

was at medium or low load, and stack Hg emissions were less than 1.0. Plant

personnel were then able to quantify how much chemical was overfed to the system

during medium- or low-load periods.
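A composite condition of this kind is simply the logical AND of two simpler conditions evaluated sample by sample. The sketch below uses hypothetical hourly values and a hypothetical high-load threshold; it is generic Python rather than the Seeq tooling the team used.

```python
# Hypothetical hourly samples of plant output (MW) and stack Hg.
load_mw = [500, 480, 300, 280, 150, 160, 450, 490]
hg = [1.0, 1.1, 0.6, 0.5, 0.1, 0.2, 0.9, 1.0]

HIGH_LOAD_MW = 400   # hypothetical boundary between high and medium load
HG_TARGET = 1.0      # internal target quoted in the article

# Two simple conditions, one boolean per sample.
reduced_load = [mw < HIGH_LOAD_MW for mw in load_mw]
below_target = [x < HG_TARGET for x in hg]

# Composite condition: medium or low load AND Hg under target.
overtreated = [a and b for a, b in zip(reduced_load, below_target)]
overtreated_hours = [i for i, flag in enumerate(overtreated) if flag]
```

Hour 6 shows why both conditions are needed: Hg is under target there, but the plant is at high load, so that hour is not counted as an opportunity for tighter control.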

A ratio of the actual Hg emissions to the target was created, and then the

percent difference was applied to the treatment chemical rate. In other words,

if Hg was 30% lower than target, the assumption was that the chemical was being

overfed by 30%. The final step was to aggregate and normalize the total volume

of wasted Hg mitigant chemical during the target condition periods, totalized

over various time periods at different pump settings when the chemical was being

overfed.
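The overfeed estimate can be written out as a short calculation. It takes the article's stated assumption at face value (Hg 30% under target means the chemical was overfed by 30%) and totals the implied excess over the periods that meet the target condition. The function name, the sample interval and all data values are hypothetical.

```python
def excess_gallons(hg, feed_gph, in_condition, target=1.0, hours_per_sample=1.0):
    """Total the estimated excess chemical (gallons) over samples that are in
    the target condition, assuming the overfeed fraction equals the fraction
    by which Hg is below target."""
    total = 0.0
    for h, feed, flag in zip(hg, feed_gph, in_condition):
        if flag and h < target:
            overfeed_fraction = 1.0 - h / target
            total += feed * overfeed_fraction * hours_per_sample
    return total

# Hypothetical hourly data: stack Hg, additive feed (gal/h), condition flag.
hg = [1.0, 0.7, 0.5, 0.1]
feed_gph = [3.0, 2.0, 2.0, 1.0]
in_condition = [False, True, True, True]

wasted = excess_gallons(hg, feed_gph, in_condition)
# 2.0 * 0.3 + 2.0 * 0.5 + 1.0 * 0.9 = 2.5 gallons (up to floating-point rounding)
```

Running the same total for test periods at different pump settings, normalized by the length of each period, gives the kind of before-and-after comparison the article reports.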

The results were telling. Calculating the gallons of excess chemical fed during

overtreatment periods showed that changes made to the pump setting reduced the

over-application of chemical during medium-load conditions by 50%. The

plant expected to dip down into medium and low rates more in the future, so

these chemical application rate optimizations were expected to become more

valuable.

The full article by Michael Risse of Seeq is found at

http://www.powermag.com/using-data-analytics-to-improve-operations-and-maintenance/?pagenum=4

Data Analytics is a Major Subject in the Monthly IIoT Webinars

In conjunction with N031 Industrial IOT and Remote O&M,

McIlvaine has conducted and recorded more than 15 webinars. Many are

industry-focused. Some are product-focused. Data Analytics is addressed in most

of them. Even if you are not a subscriber you can view and listen to the

recordings from links at

http://home.mcilvainecompany.com/index.php/component/content/article/28-energy/675-hot-topic-hour-info#weekly

There are more than 1,000 individual slide titles. Using “Find” one can see the following displays for Seeq:

Seeq is the Google of Industrial Process Data

Seeq Harnessing the Power of Available Data

Seeq - Choose the Right Data Management and Visualization Components

Seeq Interactive, Visual Tools to Analyze Industrial Process Data

Here are the slide titles in the presentation in our data analytics session by

Scott Affelt of XMPLR.

|

DAT |

1 |

Key Opportunities for Data Analytics |

|

|

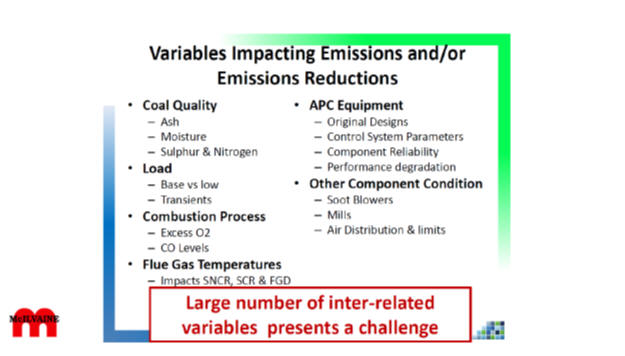

DAT |

2 |

Variables Impacting Emissions and/or Emissions Reductions |

|

|

DAT |

3 |

Approaches to Data Analytics |

|

|

DAT |

4 |

Areas to Apply Data Analytics |

|

|

DAT |

5 |

Opposing Variables Makes it a Challenge |

|

|

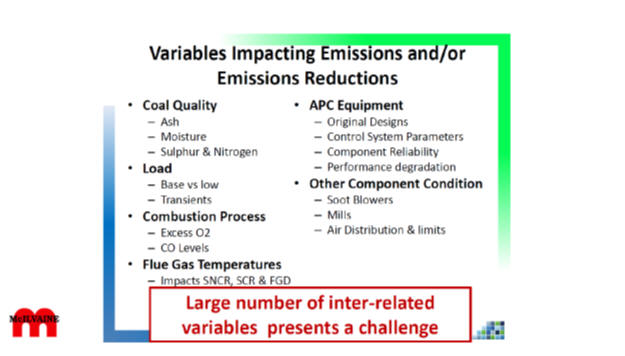

DAT |

6 |

Holistic Optimization Approach |

|

|

DAT |

7 |

Analytic Approaches

(Experienced-based Models0 |

|

|

DAT |

8 |

Analytic Approaches (Data-based Models) |

|

|

DAT |

9 |

Analytic Approaches (Physics-based Models) |

|

|

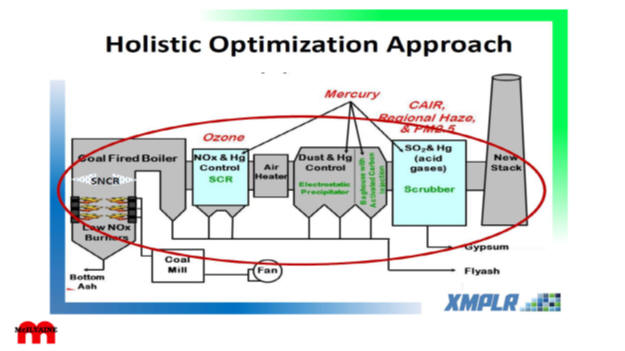

DAT |

10 |

Analytics Approaches (Hybrid Models) |

Scott lists the many variables impacting emission reduction which are

inter-related and present a challenge.

In addition to these variables you have variables requiring what Jonas Berge

calls the “deep equipment knowledge.” This extends to both control and on/off

valves, pumps, filters, scrubbers, and conveyors. There are also instrumentation

variables and variables in the consumables such as lime, limestone, activated

carbon and other treatment chemicals including those added to the scrubber to

prevent mercury re-emissions.

Scott discusses the holistic optimization approach which includes potentially

multiple injection points for mercury reduction chemicals.

In his final slide, Scott recommends a hybrid analytic model which includes the

three approaches: physics-based, data-based and experience-based.

The experience approach needs to take into account the wisdom of the many rather

than the few. There are more than 2,000 large scale power plant air

pollution control systems, which are gaining useful experience. GE states that a

big advantage of its Predix system is the experience on 40,000 turbines.

McIlvaine 44I Coal Fired Power Plant Decisions

has decision systems on each major component and process. It includes case

histories and webinars. The subject matter expert who participates in these

systems can become a subject matter ultra-expert.

Process Innovation. BHE Energy has access to a complete McIlvaine Decision System for all its coal, gas, wind, and hydro plants as well as its compressor stations (4S01 Berkshire Hathaway Energy Supplier and Utility Connect). EPA originally rejected the Utah regional haze plan, which forced BHE to consider a $700 million modification for NOx control. The only available option was adding SCR with 80% reduction, even though the required NOx reduction was modest. SNCR would make only a 30% reduction, and more was needed. There were no ready “solutions,” so McIlvaine conducted 9 hours of webinars with 80 participants including Siemens, Emerson, GE, Doosan, AECOM and many equipment and consumables suppliers. The result of these discussions was what BHE labeled the “wisdom of the crowd”: a number of minor investments, including ozone injection into the scrubber, would comfortably meet the new reduction requirement. Ozone injection had been used in the refining industry but not in coal-fired power plants.

Component Innovation. Stewart Nicholson of Primex has been a pioneer in the

development of dry scrubber technology for coal-fired boilers. He was

instrumental in forming a Dry Scrubbers Users Group and encouraging the

sharing of information among power plants. At the recent annual meeting

there were presentations by several NAES people on the use of dry scrubbers

at two of their plants. These plants use OSIsoft process management systems

with Primex acting as the subject matter expert and continually monitoring

component performance. In several cases, Primex has recommended innovative

improvements in component design and even secured a patent on one

improvement which it has licensed to component manufacturers.

McIlvaine has a dry scrubber Decision Guide and is addressing the challenge of

creating many SMUEs with the high achievement level obtained by Primex. For

example, there have been improvements in the use of Fujikin valves to provide

the critical slurry control. A Decision System on just these types of slurry

valves for this and similar applications will help develop valve SMUEs. New

technology, such as catalytic filters, needs to be assessed and warrants its own

Decision Guide. There is a separate user group for dry injection of hydrated lime.

B&W has created a hybrid using DSI and conventional dry scrubbing, so the wisdom

of DSI for SO3 reduction can be utilized in the hybrid system.

The Industrial Internet of Wisdom will be invaluable in empowering IIoT and data

analytics. Seeq and other software vendors can help component suppliers

develop wisdom around gateway systems which link to the larger cloud-based

systems.

Schneider Electric Triconex hacked at an Industrial Plant

Operations of a plant were halted by a cyber attack by hackers likely working

for a national government. The attack targeted Triconex industrial safety

technology from Schneider Electric SE.

Schneider, as well as cyber security company FireEye, confirmed the attack but

did not identify the victim, industry or location of the attack. Security

company Dragos said the target was somewhere in the Middle East, while CyberX

said the victim was in Saudi Arabia. Schneider issued a security alert to users

of Triconex, which Reuters said is widely used by the energy industry.

“While evidence suggests this was an isolated incident and not due to a

vulnerability in the Triconex system or its program code, we continue to

investigate whether there are additional attack vectors,” the alert said.

The attack is believed to be the first reported breach of a safety system at an

industrial plant by hackers. Reuters noted a safety system breach could

allow hackers to attack other parts of an industrial plant and prevent operators

from detecting the hack.

In the incident reported by Schneider and FireEye, hackers used malware called

Triton to take control of a workstation, then worked to reprogram controllers

used to identify safety issues. Operators noticed the attack when some

controllers entered a failsafe mode and caused related processes to shut down.

FireEye said the shutdown was an accident as hackers were probing the system to

see how it worked and learn how to modify safety features.

Schneider is now working with the U.S. Department of Homeland Security to

investigate the attack.

Triton is now the third type of malware known to disrupt industrial processes.

Stuxnet was used in 2010 to attack Iran’s nuclear program, while Crash Override,

otherwise known as Industroyer, was discovered in 2016 in an attack that brought

down power in Ukraine.

At the Heat Recovery Steam Generator (HRSG) Forum, March

5-7 in Houston, Daniel Azukas of Sargent & Lundy presented Remaining Useful

Life Assessment of HRSGs, a paper co-authored by Marc Lemmons and Raj

Gaikwad, also of Sargent & Lundy, and Rodger Zawodniak and Kelly Harrell of

Associated Electric Cooperative. This paper summarizes various historical

damaging mechanisms encountered in older HRSGs and how the power industry has

optimized design and/or operations and maintenance practices to prolong the life

of these components. The paper presents a case study assessing the Chouteau,

Dell, and St. Francis HRSGs, which were built in the early 2000s. The paper

benchmarks performance against peer averages and assesses operations,

inspections, and equipment for each HRSG.

Evolving Automation for Combined Cycle Units

Jim Nyenhuis of Emerson gave this presentation

at the HRSG Forum this week. It covered advances in automation.

As power markets and regulations continue to

evolve and sort themselves out, the nature of combined cycle plant operations

must remain flexible in responding to these changes. This flexibility must come

with a minimized impact on plant equipment and operations. This presentation

provides an overview of the evolving nature of process automation to address

specific control challenges being faced by the combined cycle fleet today.

Experience from the field is showing that advanced controls can provide better

performance with issues such as undersized, worn out or leaking field equipment,

issues with non-linear process responses associated with inadequate actuation or

ill-suited valve trim characteristics and process disturbances with longer time

delays such as steam attemperation or NOx injection for SCR’s. This

presentation will focus on areas within the combined cycle process where

limitations in conventional control applications can exist and how advanced

control capabilities are addressing some of these limitations. Also being

discussed will be current developments in automation technology which are making

the use of advanced control capabilities more approachable and sustainable at

the site level along with specific examples of benefits from ongoing

commissioning of these types of control strategies in the field.

Why does FAC Continue to be the Number One Problem in HRSGs?

Barry Dooley of Structural Integrity gave this presentation

at the HRSG Forum this week. It covered the causes of FAC.

Flow Accelerated Corrosion (FAC) has been the

leading cause of HRSG tube/pipe failures for many years and has caused a number

of fatalities in the power generation industry. The causes of FAC, the methods

to detect and monitor the presence of active FAC in the HRSG, and the corrective

actions effective in preventing FAC were clearly identified over 10 years ago.

Countless technical papers, magazine articles and conference presentations have

been published and presented on FAC, yet it continues to be the leading cause of

HRSG tube/pipe failures. This presentation will explore why this is the case and

how to reverse this unfortunate and dangerous trend.

EUEC had Lots of Good Gas Turbine Papers

EUEC was held in San Diego from March 5-7. There were 400 papers presented to

some 2,000 attendees. Some of the valuable papers are listed below. They are

available for sale by contacting

http://www.euec.com/

McIlvaine Company

Northfield, IL 60093-2743

Tel: 847-784-0012; Fax: 847-784-0061

E-mail: editor@mcilvainecompany.com

Web site: www.mcilvainecompany.com